Alright so for my final blog I'm going to do something that I have no doubt that many of my classmates have already done: My personal gripes with 2LoC and what I would do to change it. Without further preamble, let's dive headlong into it!

Camera

First things first, setting up the camera is a massive chore to me. It's the kind of thing where you don't need to make a lot of cameras unless you're doing some special effects (e.g. reflection), so setting up a function to make it easier feels like it might mean spending more time on the solution than on the actual problem. For an idea of why I might hate the camera, here's sample code to get a perspective camera working:

cameraEnt =
    pref_gfx::Camera(m_entityMgr.get(), m_cpoolMgr.get())
    .Near(1.0f)
    .Far(1000.0f)
    .VerticalFOV(math_t::Degree(60.0f))
    .Position(math_t::Vec3f(0.0f, 5.0f, 5.0f))
    .Create(m_win.GetDimensions());

{
    //Set camera settings
    math_t::AspectRatio ar(math_t::AspectRatio::width((tl_float)m_win.GetWidth()),
                           math_t::AspectRatio::height((tl_float)m_win.GetHeight()));
    math_t::FOV fov(math_t::Degree(60.0f), ar, math_t::p_FOV::vertical());

    math_proj::FrustumPersp::Params params(fov);
    params.SetFar(1000.0f).SetNear(1.0f);

    math_proj::FrustumPersp fr(params);
    fr.BuildFrustum();

    cameraEnt->GetComponent<gfx_cs::Camera>()->SetFrustum(fr);
}

Now, setting all the variables such as field of view, near and far during the camera construction only to have to re-declare this stuff when making a frustum feels REALLY redundant. I would think that setting this stuff up in the camera would automatically handle setting up the frustum during creation. Now you might say "Oh you have no indicator to set it as perspective, that's why!" Well, there is a function where you set perspective with a boolean value, but this doesn't seem to do anything. Overall it makes the whole process of making a camera, one of the most necessary entities in a game, feel like it has six more lines than it should while being simultaneously unintuitive as I don't think most users would think to create a frustum at this point in creation of the game.
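
To make the complaint concrete, here is a rough sketch of the kind of "say it once" setup I have in mind. It is plain C++ and does not use 2LoC at all: `PerspectiveSettings`, `Frustum` and `BuildFrustum` are names I made up for illustration, and the only real content is the standard relation between vertical field of view, aspect ratio and the near-plane extents.

```cpp
#include <cmath>

// Hypothetical types for illustration only; none of these names exist in 2LoC.
struct PerspectiveSettings {
    float nearPlane;
    float farPlane;
    float verticalFovDegrees;
    float viewportWidth;
    float viewportHeight;
};

struct Frustum {
    float left, right, bottom, top, nearPlane, farPlane;
};

// Derive the frustum from the same settings the camera was given,
// so near, far and FOV only have to be stated once.
inline Frustum BuildFrustum(const PerspectiveSettings& s) {
    const float pi = 3.14159265358979f;
    const float halfFovRadians = s.verticalFovDegrees * 0.5f * pi / 180.0f;
    const float aspect = s.viewportWidth / s.viewportHeight;
    const float top = s.nearPlane * std::tan(halfFovRadians);  // half-height of the near plane
    const float right = top * aspect;                          // half-width of the near plane
    return Frustum{-right, right, -top, top, s.nearPlane, s.farPlane};
}
```

With something like this, a camera builder could call `BuildFrustum` internally during creation instead of asking the user to repeat the same numbers in a second block.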

Shader Limitations

Next up are the limitations on how you can use shaders with 2LoC. Creating materials and such is actually pretty simple and I do like it... but being unable to access the geometry shader (as well as the other lesser-known stages) ends up hampering development. Case in point: dealing with particles. Normally for a particle you could just send in a single vertex and then create a quad in the geometry shader. That is much more efficient in terms of calculations, since you only have to apply the physics calculations to the single vertex in the shader versus doing it 4 times (assuming you have vertex indexing in the engine!), once for each corner of a quad. So this not only limits what one can do with shaders, it also limits how efficient you can make your processes and hurts development in the long run!
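
For anyone who hasn't seen the trick, this is roughly the work a geometry shader would do per particle, written as plain CPU-side C++ so it can be read without any shader setup. It is only a sketch: `Vec3` and `ExpandToQuad` are my own illustrative names, and the camera's right and up vectors are assumed to be unit length.

```cpp
#include <array>

struct Vec3 { float x, y, z; };

inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator*(Vec3 v, float s) { return {v.x * s, v.y * s, v.z * s}; }

// Expand one simulated particle position into four camera-facing corners.
// Physics only ever touches `center`; the corners are derived afterwards.
// Corner order: bottom-left, bottom-right, top-right, top-left.
inline std::array<Vec3, 4> ExpandToQuad(Vec3 center, Vec3 camRight, Vec3 camUp,
                                        float halfSize) {
    const Vec3 r = camRight * halfSize;
    const Vec3 u = camUp * halfSize;
    return {{center - r - u, center + r - u, center + r + u, center - r + u}};
}
```

The point is that the simulation step runs once per particle, and the quad is just a cheap expansion at the very end.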

General user unfriendliness

There are a few other problems I could go into, such as the extensive namespaces which make dealing with things difficult (I have had many times where I couldn't copy a material over to another material because, as it turns out, material 1 is in one namespace and material 2 is in a different one... despite both being materials), as well as things that the engine could benefit from but doesn't have for school reasons (e.g. scripting and particles). But above all else is just how obnoxious the engine can be to use. There are a lot of tricks that you need to sort of work out for yourself in order to get some things to work. It might be easier if we had some rudimentary documentation to go by (e.g. a list of what the different functions do), but without this it is very challenging to work out how to do what should be very simple things. For example, when I create an entity during runtime its mesh and material won't appear unless I call the appropriate systems and say "Initialize(ent)". I would not have guessed that I need to do this until a friend pointed it out to me.

Overall, the engine seems to suffer from a serious problem: it's usable once you have become used to it (which isn't really praise, since that can apply to ANYTHING), but it's very unfriendly to new users and would benefit from a bit of documentation to go with the samples provided. Even something as simple as "Here is a list of basic functions you should know but that aren't immediately found, and what they do:" would give users something to easily reference, like they would with Unity's documentation. In the end, the engine has a long way to go before I'd say it would work well as an engine for general use.

That's it! Sorry if I seem a little bit harsh but this engine has bitten me too many times to count and has left me a bit miffed. I hope you enjoyed the read, cheers!

Wednesday, 3 December 2014

Friday, 28 November 2014

Game Design Blog #3

This week I'm going to talk about one of my favourite games, and one of the reasons I love it: its pacing! The game in question is the lovely, the fantastic....

So first things first, I will end up going more into depth on the overall pace of the game rather than the pace in specific levels. This is because there isn't a whole lot to write about in terms of the pacing of levels; it's not that it's bad, it's just that it's pretty standard. Now then, why do I love the overall pacing of the game? Well, to put it simply, the game does a good job of keeping a steady but ever-building pace. When the game starts (and after the tutorial), the player only has 2 members on their squad and they are given 4 missions which reward the player with additional squad members. By doing this the player is given a chance to experiment with their squad without the number of unused squadmates getting out of hand. After a point in the game the player unlocks an additional 4 missions which are much the same, allowing for a break before the player gets new squad members.

Another interesting thing is the Loyalty Missions. These are missions which require the player to go in with 1 squad mate preselected for them. This gives the player a chance to interact with characters they might not necessarily interact with and get used to working with them. This also serves as a strong way of preparing the player for the finale of the game in which they go in with every squad member and have to divide it up into different teams while making use of their different skill sets.

In terms of the story pacing, the game does an excellent job of providing the player with points that ramp up into action while providing brief interludes where the player doesn't have a lot of stress on them but the game isn't boring. For example, the missions to acquire new squad members are in the slower points of the game and serve to help build up anticipation for the next big missions. The overall pacing of the story does a good job of building the player's anticipation and interest until the big finale of the game, which puts the player in a position where they simply do not want to stop until the end of the game.

Overall, the pacing of Mass Effect 2 is quite good at maintaining the player's interest and allowing them plenty of time to learn the skills and squad members that they gain over the course of the game.

That's it for now!

Game Design Blog #2

Alright, time for Game Design blog numero two! Unlike the last blog I'm not going to talk about a specific game; rather, I'm going to talk about a control scheme that has become so prevalent in a certain genre of game, and put in some guesses as to how it has persisted for so long in gaming culture. What control scheme might I be talking about? Well...

That's right, I'm going to be talking about the First Person Shooter (and Third Person Shooter) control scheme on the PC! The above picture is for the game Team Fortress 2 so there are some parts of it that aren't going to carry across games, but the main things that you need to focus on are WASD, R, Ctrl and Space keys. Now first of all, what do these keys represent? Well WASD is movement in the 4 cardinal directions relative to the player, R is for reloading, Ctrl is usually for crouching and Space is used for jumping. This layout has been carried across many, MANY games in the past. Why might this be?

Well, if you look at the layout of the WASD keys, they're positioned in such a way that the player can leave their left hand where they normally have it on the keyboard, allowing the fingers to line up in a normal and comfortable way. Another advantage is that the ring, middle and index fingers line up vertically with the 4 keys in three distinct columns, allowing for easy use. Finally, the WASD keys form a sort of compass which can easily be interpreted by the player. This analogy between the player and the game works incredibly well, as the keyboard movement of forward, left, right and backward carries over perfectly between the player's perspective and the perspective of the character in game.

Now let's take a look at the placement of the other keys: The position of the R key keeps it within easy reach of the player's index finger, and it's also tied to a button that players can easily find through muscle memory, as they only have to think "press R to Reload". The Space key would have been chosen because jumping is an action that players end up using quite frequently, and its size and position relative to the player's thumb allow for easy and frequent use. Lastly, the Ctrl key makes use of the player's remaining finger while also staying within easy reach of a single hand.

Overall this layout allows the player to easily use one hand to perform all their movements and any necessary miscellaneous movements. However, shooters also rely heavily on the player's mouse which acts as a camera control through movement. This allows for rapid yet precise manipulation of the player's perspective. The left and right mouse buttons will also see use for a weapon's primary (left) and alternate (right) attacks. This works well as the left click on the mouse is often used for most primary actions such as selecting icons or pressing buttons, allowing the player to make an easy association between the click and the action. The same can be said for the right click which sees use for additional actions in day-to-day use.

Overall, the control scheme for shooters is one that allows the user to make many easy associations with the use of the keyboard and immediate actions in the game, as well as analogies which allow the user to perform actions with the keyboard without having to put too much thought into them. This scheme is very sensible and will likely be around for many more years to come.

That's it for now!

Game Engines 4: Valve Hammer Editor

Okay so this blog will be a bit more jumbled since I'm going to be talking primarily about 2 very different topics: Binary Space Partitioning and different methods for culling. The one thing that links these two topics is that I'll be talking about them in the context of Valve's Hammer Editor (a level-editing tool for the Source Engine), and most of the talk will be in regards to how it works with Team Fortress 2, as this is the area in which I have the most experience using the editor.

First of all, let's talk about what Binary Space Partitioning is. BSP essentially takes a space (such as a level) and repeatedly subdivides it into nodes until some requirement is met. This allows a level to be broken down into far more manageable chunks. Unsurprisingly, this method was once (and in some cases still is) seen primarily in shooters, as they usually have a variety of rooms which can be interpreted as nodes in a tree. In this scenario, using a BSP allows the engine to cut down on the number of checks it needs to make for render culling, as it can easily work out all the rooms attached to the one the player is currently standing in.
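
To give a feel for the "subdivide until a requirement is met" part, here is a tiny sketch in C++. It is heavily simplified and is not how Source does it: I'm assuming 2D points instead of level geometry, axis-aligned splits that alternate between x and y (real BSP compilers typically pick splitting planes from the level geometry), and a stopping rule of "at most `maxPerLeaf` items per node". All names are made up for the example.

```cpp
#include <algorithm>
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

struct Point { float x, y; };

struct BspNode {
    std::vector<Point> items;        // filled only for leaves
    std::unique_ptr<BspNode> back;   // lower half along the split axis
    std::unique_ptr<BspNode> front;  // upper half along the split axis
    bool isLeaf() const { return !back && !front; }
};

// Recursively split the points in half until a node holds few enough of them.
inline std::unique_ptr<BspNode> BuildBsp(std::vector<Point> pts,
                                         std::size_t maxPerLeaf, int depth = 0) {
    maxPerLeaf = std::max<std::size_t>(maxPerLeaf, 1);  // guard against endless splitting
    auto node = std::make_unique<BspNode>();
    if (pts.size() <= maxPerLeaf) {  // the "requirement": small enough to stop
        node->items = std::move(pts);
        return node;
    }
    const bool splitOnX = (depth % 2 == 0);  // alternate the split axis per level
    std::sort(pts.begin(), pts.end(), [splitOnX](const Point& a, const Point& b) {
        return splitOnX ? a.x < b.x : a.y < b.y;
    });
    const std::size_t mid = pts.size() / 2;
    std::vector<Point> backPts(pts.begin(), pts.begin() + mid);
    std::vector<Point> frontPts(pts.begin() + mid, pts.end());
    node->back = BuildBsp(std::move(backPts), maxPerLeaf, depth + 1);
    node->front = BuildBsp(std::move(frontPts), maxPerLeaf, depth + 1);
    return node;
}

// Count the leaves, i.e. the "manageable chunks" the space ended up in.
inline std::size_t CountLeaves(const BspNode& n) {
    if (n.isLeaf()) return 1;
    return CountLeaves(*n.back) + CountLeaves(*n.front);
}
```

Each leaf ends up as one small chunk of the level, and the tree tells you which chunks sit next to each other without having to check everything against everything.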

The practicality of this system for shooters makes it no surprise that it's used in the Source engine, which is primarily used to develop shooters. The system can be further enhanced by using portal-based rendering (another prominent feature of the Source engine). Portal-based rendering is essentially the process of treating every door or window connecting two areas as a 'portal' that the player can see through. When the engine goes to render a scene it will use frustum culling to remove any objects that are not within view of the player... but it then runs into a problem: it will still try to render objects hidden behind a wall. To cut down on this, we only render the objects in the player's room, then cast frustums through each visible portal to figure out which objects in the neighbouring rooms can be seen. Once the engine knows what objects we can see in other rooms, it adds them to the render list. With BSP and portal rendering combined we can pretty effectively cut down on the demands put on the renderer, as we reduce the number of rooms that are checked for rendering and cut the size of the render list by a significant amount.
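
The room-to-room part of that idea can be sketched as a simple graph walk. This is a big simplification and my own illustration, not Source's code: rooms are nodes, portals are edges, and the "is this portal inside the (clipped) view frustum?" test is left to a caller-supplied function instead of doing real frustum clipping.

```cpp
#include <functional>
#include <set>
#include <vector>

struct Portal {
    int toRoom;  // index of the room on the other side
    int id;      // lets the visibility test tell portals apart
};

struct Room {
    std::vector<Portal> portals;
};

// Start in the player's room and only step into a neighbouring room
// if the portal leading to it passes the visibility test.
inline std::set<int> VisibleRooms(const std::vector<Room>& rooms, int startRoom,
                                  const std::function<bool(const Portal&)>& portalVisible) {
    std::set<int> visible;
    std::vector<int> pending{startRoom};
    while (!pending.empty()) {
        const int room = pending.back();
        pending.pop_back();
        if (!visible.insert(room).second) continue;  // already handled this room
        for (const Portal& p : rooms[room].portals) {
            if (portalVisible(p)) pending.push_back(p.toRoom);
        }
    }
    return visible;
}
```

Only the objects in the returned rooms would then need to go through per-object culling and onto the render list.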

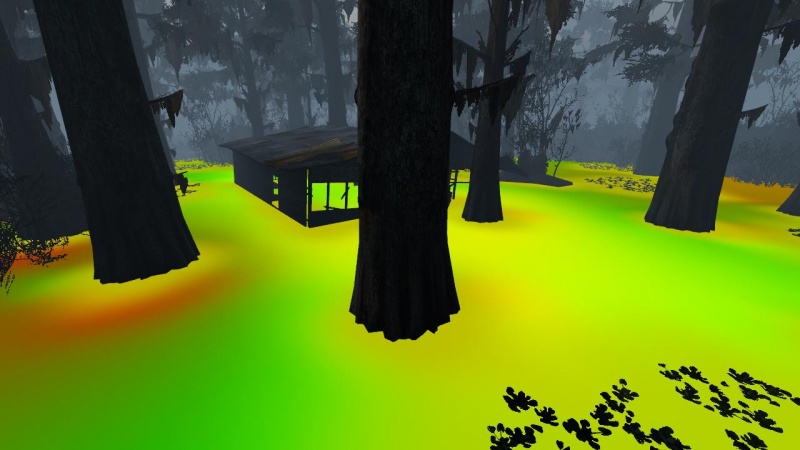

Now, how does the engine know where to find the portals as well as figure out ways to make subdividing the level easier? Simple, you leave it up to the level designer (or atleast someone who is making the level)! One of the things about the Hammer Editor is that it has a LOT of materials that do not get drawn during render time which are meant to be used more for letting the engine have an easier time or for gameplay (i.e. Spawn areas will often use a material that has a No Draw texture, but it will have gameplay triggers tied to the box representing the spawn). For some idea of what the editor looks like while you work in it, here's an idea:

I'm sorry to have brought up the Source Engine again, but I just find it to be such a great little engine that has led to a lot of interesting games. While it may not be on the same level of flexibility as Unreal or Unity, it's still capable of producing some truly magnificent games, and its level editor can give a lot of unique insights into how the engine as a whole operates (from interpreting game systems to rendering). For an idea of how flexible the level editor is, it allows for something that could be seen as making rudimentary scripts for its gameplay systems (e.g. this object will move when this condition is met) and a level designer can VERY EASILY set this all up.

That's about it for my ramble about Source. If you made it this far, then congratulations. Cheers!

(Image of the Hammer Editor gotten from: http://www.moddb.com/engines/source/images/hammer-editor )

Friday, 7 November 2014

Game Engines 3: Scripting

And now for Game Engines blog #3! This week I'll be giving a breakdown of Scripting and how it applies to game engines. First of all, what is scripting? Scripting is essentially creating a smaller program that can be executed by the game. Scripts come with a variety of perks which make them invaluable in game development: they can be easily used in an ECS; they can compile a lot faster than normal C++ files; and they can be recompiled at runtime. One of the most important things is that they can easily be edited and programmed.

First things first, how do scripts work? A script can be written in notepad (although you really shouldn't since notepad is horrible to work with) like a text file and is then interpreted by the system in such a way that it knows how to act in terms of the game system. It's pretty straightforward and it's more or less identical to how one would set up a program in C++. In fact, when you compare how scripting is supposed to work and the method in which we create our custom components for 2LoC, it's very similar with the main difference being that the custom components take longer to compile than the script.

Now, why do these features make them so great? Well for starters when it comes to an ECS you can make a script component which only needs a path assigned to it to be created, much like you would with a mesh component. This then saves you the trouble of creating custom components and custom component systems like you have to for creating a custom component with 2LoC and cuts down on the number of files that have to be linked during compilation. It also allows you to keep the number of different components you need to have in your ECS to a minimum as you aren't forced to create unique ones for each circumstance and can instead rely on multiple script components to do the same job.
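
As a rough picture of what I mean, here's a minimal sketch of a path-only script component. None of this is 2LoC (which doesn't have scripting): `ScriptComponent`, `Entity` and `RunScripts` are invented for the example, and the actual interpreter is stood in for by a function the caller passes in.

```cpp
#include <functional>
#include <string>
#include <vector>

// The component stores nothing but the path to the script file.
struct ScriptComponent {
    std::string path;
};

struct Entity {
    int id;
    std::vector<ScriptComponent> scripts;  // several scripts instead of several custom components
};

// Hand every attached script to whatever actually interprets it.
// Returns how many scripts were run for this entity.
inline int RunScripts(const Entity& e,
                      const std::function<void(int, const std::string&)>& runScript) {
    int ran = 0;
    for (const ScriptComponent& s : e.scripts) {
        runScript(e.id, s.path);
        ++ran;
    }
    return ran;
}
```

Giving an entity new behaviour then means attaching another path rather than writing, registering and compiling a new component and system pair.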

Scripting also has a few perks in terms of hardware, as mentioned a few times earlier. Their very nature eliminates the need to create and link different objects for classes and cuts down on the overall amount of code drastically, allowing for developers to have much faster compile times. The other advantage to working with scripts is that they can easily be recompiled without interrupting the system overall. This is actually a huge advantage as it allows you to change code on-the-fly without having to rebuild a project. All you have to do is open the script, edit and save then recompile the scripts.

Lastly, scripts are useful for non-programming developers. They are generally easier to grasp than the underlying code of engines and are easier to play around with. The ability to edit and reload them without interrupting the game is also very useful in terms of design as it allows designers to see changes to values immediately rather than having to stop and recompile an entire project or ask a programmer to make the change for them.

Overall, scripting is an invaluable tool in game development. It allows us to save time in various areas from building the final product to quickly testing the game as we go. It also helps by reducing the complexity of developing the game and can help drastically improve a system's versatility: Case in point, Unity is used to create a large range of games in different genres while developers can only add their own code through scripts. The only difficulty comes in setting up a scripting language to work with your engine, but once that's done you've overcome the hardest problem.

Until next time!

Friday, 17 October 2014

Game Engines 2: Components

Time for blog #2 for Game Engines! Today I'm going to be talking about one particular topic that has become very prominent in the Game Industry and in game engines in general: Component based systems!

So first of all, what is a component based system? Essentially a component system relies on two things: Entities and Components. The Entity is the container for all the components and is basically an object in the game world. The entity only has to worry about having a tag and an ID (and even then, an ID is usually enough) to allow developers to easily track down an entity in the system. On the other hand we have the Components, which are the meat of the object and make it unique. Components can range from things such as transformations to meshes to scripts, and contain different values that allow the entity to interact with the game world.

So now that we know what an Entity and a Component are, what does this mean? Basically we can have a huge range of flexibility with our Entities. In previous system designs that rely heavily on inheritance we would have to derive from a long chain of classes to create an entity that can do what we want (e.g. Entity->Character->Enemy->Flying Enemy->Drone) and we would have a harder time crossing over features from different classes. With components we would only have to worry about adding something such as a Flying component, an Enemy component and any components that might be specific to the Drone enemy. This allows anyone working with the engine to easily make an entity that fits their needs (so long as they can make their own components).

That's the general summary of how an Entity Component System works on the surface. In my next blog I'm going to go into one of the lesser-known components that you can use in an ECS (and that isn't currently implemented in 2LoC): Scripting!
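
Here's a small sketch of the Drone example done with components instead of inheritance. It's a toy, not 2LoC's ECS: `World`, `FlyingComponent`, `EnemyComponent` and `MakeDrone` are names I made up, and component storage is just one map per component type keyed by entity ID.

```cpp
#include <cstdint>
#include <unordered_map>

using EntityId = std::uint32_t;  // the entity itself is nothing more than an ID

struct FlyingComponent { float altitude; };
struct EnemyComponent { int damage; };

struct World {
    EntityId nextId = 1;
    std::unordered_map<EntityId, FlyingComponent> flying;
    std::unordered_map<EntityId, EnemyComponent> enemies;

    EntityId CreateEntity() { return nextId++; }
};

// A "drone" is not a class at the end of an inheritance chain;
// it is simply an entity that happens to have both components.
inline EntityId MakeDrone(World& w) {
    const EntityId id = w.CreateEntity();
    w.flying[id] = FlyingComponent{10.0f};
    w.enemies[id] = EnemyComponent{5};
    return id;
}
```

A harmless bird would be an entity with only a `FlyingComponent`, with no need to rearrange a class hierarchy to share the flying behaviour.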

Friday, 26 September 2014

Game Design Blog #1

And hot-on-the-heels of my Game Engines blog is Game Design! For this one I'm not totally sure what to do, so I'm going to play it safe and talk about the recent topics and how they relate to one of the few games I have played recently: Tales of Xillia 2! The two topics I'm going to focus most prominently on are the controls and the UI.

First a little summary of the game: Tales of Xillia 2 is a role-playing game with instanced, party-based combat. When the player enters combat they are in a circular arena with a character they control and up to 3 AI (or player controlled) characters. Now on to gameplay!

First up: the controls of the game, on a PS3 controller. The game itself switches between two states (not including things like menus and not going into a LOT of detail): the overworld state and the combat state. The overworld state is when the player is able to run around freely (i.e. towns, fields and dungeons); obviously this has been simplified, but that's more to cut down on length. In this state the player can encounter monsters (in the latter two) and controls the game by using the left joystick to move their avatar and the X button to interact with objects. The player can then transition to the combat state by colliding with an enemy on-screen, and in this state the controls become drastically more complex. The player moves their character by moving the left joystick left or right (the player is on a fixed axis for the most part), but can press L2 to enter a 'free-run' mode where they can move in any direction on the field with the left joystick. The player can attack and guard using the X and square buttons respectively. The player can also use the circle button with the left joystick, or just the right joystick, to activate special attacks. This is more or less the basic gameplay (not going into the depth of special stuff). Now a quick breakdown of how intuitive the gameplay feels!

The overworld gameplay doesn't have that much that needs to be said about it. It worked pretty well but it wasn't exactly special. The gameplay in the combat state, though, flows very smoothly: the basic attack handles well, and the idea of tying the special attacks to the movement in a small-but-unaffecting way actually helps make the gameplay much easier to handle without breaking the flow. I don't think that there's anything that could be done to improve the gameplay, as it requires the least amount of movement on the player's part to change actions.

One gameplay mechanic for combat that I had previously omitted was the ability to partner with one other party member to work with them in combat (e.g. the character can block to stop you from being attacked from behind, or flank an enemy you're fighting). The way a player links with another character is by pressing on the D-pad in the direction of the character's icon on the HUD. My reason for listing this separately from everything else is to tie into the....

UI! The UI for the overworld state is pretty straightforward, with a mini-map that shows where the player and other things of note are, as well as the option for pop-ups like objectives. It's very simple to understand and isn't intrusive, so it gets a pass! The combat state UI is very much geared towards melding with the controls: important meters are on the left and right, while the names and stats of the 4 party members are laid out in a diamond on the bottom-center portion of the screen. This last part works very well with the ability to link with the other party members, as they line up perfectly with the D-pad so that it's very intuitive. Overall, the UI is very clean and easy to read (for me, anyway). But I'll let you decide by finally providing a screenshot of the game!

And I'm not going to be going into super-fine detail on everything (I'm sure you can see that there are quite a few finer details), as that would just entail going into a lot of depth that you learn over time.

At the end of the day, the game is very well-designed and friendly to both old and new players. Though far more importantly than that: The game is FUN!

And with that, I'm going to call this blog done...Namely because I'm rambling.

Thanks for reading, cheers!

First a little summary of the game: Tales of Xillia 2 is a role-playing game with instanced, party-based combat. When the player enters combat they are in a circular arena with a character they control and up to 3 AI (or player controlled) characters. Now on to gameplay!

The controls of the game, on a PS3 controller. The game itself switches between two states (not including things like menus, and not going into a LOT of detail): the overworld state and the combat state. The overworld state is when the player is able to run around freely (towns, fields and dungeons); obviously this has been simplified, but that's more to cut down on length. In this state the player can encounter monsters (in the latter two), and uses the left joystick to move their avatar and the X button to interact with objects. The player transitions to the combat state by colliding with an enemy on-screen, and in this state the controls become drastically more complex. The player moves their character by moving the left joystick left or right (the player is on a fixed axis for the most part), but can press L2 to enter a 'free-run' mode where they can move in any direction on the field with the left joystick. The player can attack and guard using the X and square buttons respectively. The player can also use the circle button with the left joystick, or just the right joystick, to activate special attacks. This is more or less the basic gameplay (not going into the depth of special stuff). Now a quick breakdown of how intuitive the gameplay feels!

The overworld gameplay doesn't have that much that needs to be said about it. It worked pretty well but it wasn't exactly special. The gameplay in the combat state, though, flows very smoothly: the basic attack handles very well, and the idea of tying the special attacks to the movement in a small, unobtrusive way actually helps make the gameplay much easier to handle without breaking the flow. I don't think that there's anything that could be done to improve the gameplay, as it requires the fewest movements on the player's part to change actions.

Game Engines 1: The Source

Alright, after a long delay I'm back! Jumping right into this I'm gonna be talking about one of my favourite game engines for this new class, the wonderful engine that has given us so many games by Valve: The Source Engine! I'll be doing a quick review of it from its beginnings to its future while going through some of the special features implemented in it as time went by, but first I'll give a quick summary of what the engine is!

As mentioned before, the Source Engine is Valve's in-house game engine. It was designed to be used for first-person shooters (a genre which Valve excels in) but has been successfully repurposed to be used for other genres (such as top-down shooters like Alien Swarm). It evolved from the GoldSource Engine which was built off of the Quake Engine.

Now, there's something rather special about the Source Engine in relation to our class: It is made entirely in C++ (well, C++ and then it uses OpenGL and Direct3D for shaders). This means that there are only two things to stop a UOIT student from making an engine as powerful as Source: Experience and manpower. I consider that fantastic motivation to start learning the ins-and-outs of engines to make my own. Anyway, on to the features that were added!

So the Source Engine is constantly evolving, with new games adding new features to it. For example, Left 4 Dead 2 added dynamic 3D wounds to the engine, these essentially being a unique way of creating wounds on characters that change the appearance of the model in a drastic way (e.g. arms falling off and having the bone sticking out). The game DOTA 2 introduced keyframed vertex animation to the engine, and then there's a much longer list of features (with things like blended skeletal animation, inverse kinematics, dynamic water effects, etc.). Valve doesn't track the engine's development by version numbers like you would typically see (e.g. version 1.0.0, version 1.0.1, etc.), instead simply updating it in smaller or bigger ways as required.

Looking at its design, the Source Engine has the capacity to constantly grow as time goes on and add new features. Though this approach would ultimately prove a bad idea as new techniques and design patterns are constantly emerging and the base code for the engine simply could not easily be altered without potentially ruining the engine. This seems to be something that Valve has picked up on as they are currently developing (or, if rumors are to be believed, have already released) a Source Engine 2. This engine should see an overhaul to the lower-level architecture of the system so that it could run much more efficiently and have greater potential than the previous one.

On a semi-unrelated note though, the Source Engine is also a great example of how powerful a game engine can be in the right hands. Valve has used their engine not only to make games but also to make offline tools such as the Source Filmmaker and Hammer Editor, which they could then use to improve their production pipelines. The Source Filmmaker allows for easy development of in-engine cutscenes by letting them create a scene in the engine like you would with Maya, except that it uses the engine's lighting systems. The best example of its potential is the Meet the Team videos for Team Fortress 2. Meanwhile, the Hammer Editor functions as the company's in-house map editing tool. Not only does it allow users to create the shape of the map, it also allows them to set up things such as game triggers which the engine can then use to construct the full level experience.

Overall, the Source Engine is a fantastic piece of technology, in my mostly biased opinion. Unfortunately, I have never used it, so I can't make any comments on how user-friendly it is or what downsides there are to developing for it. I hope you've enjoyed reading this rambling blog!

Cheers!

Wednesday, 2 April 2014

Curtain Call

So this is the last blog for the class, 10/10 (probably wouldn't read again), the big finale...I know that in the past I've generally tried to talk about stuff that I find really interesting like Finite State Machines or Valve's water shader but I'm not going to take that approach with this blog...Nor will I play it safe and talk about some topic we were taught in class and just repeat everything that was said with maybe a few additions. Instead I plan to do two things in one today: I'm going to do a review of my own and talk about myself!...By that I mean I'm going to talk about what I've learned, what I plan to do/learn and if I feel I have the time then talk about why I plan to do it.

What I learned in class

So let's talk about the fun things that I've learned from this class as well as from the research I've done because of the class. To start things off, FBOs are a fantastic concept to me. They let you do so much and can lead to some pretty interesting stuff. Effects like Bloom, Thresholding and Tone-mapping are fine examples of what you can do with FBOs and reasons why I'm quite glad to have learned them!
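To give a rough idea of what a thresholding pass and a tone-mapping pass actually compute per pixel, here's a minimal CPU-side sketch. It leaves out the FBO setup entirely, the Color struct and function names are made up for illustration, and I've assumed the Reinhard operator and Rec. 709 luminance weights as one common choice (not necessarily what we used in class). In a real renderer this would live in a fragment shader reading from an FBO's colour texture.

```cpp
// Hypothetical HDR colour type, just for this sketch.
struct Color { float r, g, b; };

// Perceived brightness using the Rec. 709 weights (an assumed choice).
float Luminance(const Color& c)
{
  return 0.2126f * c.r + 0.7152f * c.g + 0.0722f * c.b;
}

// Thresholding (bright pass): keep only pixels brighter than the threshold.
// The result is what a bloom pass would go on to blur.
Color BrightPass(const Color& c, float threshold)
{
  if (Luminance(c) > threshold)
  {
    return c;
  }
  return Color{ 0.0f, 0.0f, 0.0f };
}

// Tone-mapping: squash an HDR value in [0, inf) into [0, 1)
// using the simple Reinhard curve v / (1 + v) on each channel.
float ReinhardChannel(float v)
{
  return v / (1.0f + v);
}

Color ToneMapReinhard(const Color& hdr)
{
  return Color{ ReinhardChannel(hdr.r),
                ReinhardChannel(hdr.g),
                ReinhardChannel(hdr.b) };
}
```

The point of the FBO is that each of these passes renders into a texture that the next pass can read, so they can be chained one after another.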

Another topic is Normal Mapping. The fact that it allows you to get much more out of lower-resolution models is fantastic, as it gives you a similar quality of visual without putting as much strain on the GPU, and you can load in models much faster! The idea behind normal mapping also gave way to a few other ideas early on in the term, so it had the nice side-effect of sowing the seeds for some of my other ideas.

Next up is reflection. This is pretty easy as it's just rendering the scene from a different point of view, but it can allow for quite a bit of flexibility (e.g. mirrors and water surfaces, among other things).
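As a small sketch of that "different point of view": for a flat mirror or water surface, one common approach is to mirror the camera across the reflective plane and render the scene again from there. The snippet below assumes a horizontal plane at height planeY, and the Vec3 struct and function names are hypothetical helpers, not part of 2LoC or any other engine.

```cpp
// Hypothetical minimal vector type, just for this sketch.
struct Vec3 { float x, y, z; };

// Mirror a point (e.g. the camera position) across the plane y = planeY.
Vec3 ReflectPointAcrossPlaneY(const Vec3& p, float planeY)
{
  return Vec3{ p.x, 2.0f * planeY - p.y, p.z };
}

// Mirror a direction (e.g. the camera's forward or up vector).
// Directions have no position, so only the y component flips.
Vec3 ReflectDirectionAcrossPlaneY(const Vec3& d)
{
  return Vec3{ d.x, -d.y, d.z };
}
```

Rendering from the mirrored camera into a texture and then sampling that texture on the mirror or water surface gives the reflection; a camera at (0, 5, 5) above water at y = 1 ends up at (0, -3, 5).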

Then there's also Fluid Dynamics, Implicit Surfaces, Shadow Mapping and Deferred Rendering which are all interesting topics that I've only had the time to read up on but not actually apply sadly...Though that will hopefully change within the next 12 months.

What I plan to do and learn

So some of this stuff has already been alluded to in previous blogs but I'm going to reiterate anyway. Within the next year I plan to apply most of the stuff that I have yet to apply, primarily Shadow Mapping and Deferred Rendering. I also hope to perform Mesh Skinning with Dual Quaternions, as it seems to be the most efficient method at this point in time as well as having the fewest artifacts. I also hope to learn Inverse Kinematics, as it can provide a lot of useful features, as evidenced by its use in Uncharted, which we learned about last year. I also hope to apply a few other techniques which we learned in class, like Depth of Field and SSAO...Long story short, I want to be able to say that I completed every single question we were given for homework, even the insane upgrades.

On top of all of this I have two major goals: Getting my style of programming up to a more professional level where I can better manage memory and have it well optimized (ideally able to use DOD where it should be used), and having started work on my engine without it looking like I stopped the moment school started up...Ideally in a year's time I'd be past my lowest goal of a quarter of the way into development.

Why am I doing this? (Warning: Some of this stuff may get weird)

I'm sure this is a question that has been asked about me many times, as I often seem to take harder paths than most (i.e. I set out with the intention of writing blog posts about everything BUT what we covered in class, and last term I made a point of doing harder things like skeletal animation before we even got close to touching the concepts) and I've also shown a tendency for biting off more than I can chew. So why would I do all of this? Well, it goes back to two very simple questions, ones that I've seen quite a few students ask and be asked at one point or another: "Why are you here?" and "Why do you want to make games?" They're innocent enough questions and everyone should definitely be asked them at one point or another, because it really makes you reevaluate yourself and your goals in life.

So what're my answers to those questions? Well you see: Video games as a whole have done a lot for me in the past. They've picked me up when I was down and they've given me the strength of will to get past the obstacles in my life. They've also brought me close to a lot of people, many of whom have become like close family members despite the distances between us. They've done so much for my life and, knowing that, I can safely say that I want to do that for someone else. I could probably go on to talk about a lot more but there's a time and place for everything and any further statements may look like I'm trying to get some special treatment.

So why am I saying this? Well, in this current situation I feel that it's best to voice my personal motivations, as it adds a layer of perspective to all the questions that I ask regarding programming and game design. Suddenly all my questions about engines and such, as well as my choosing to look into topics that go beyond the class material, go from idle curiosity to goal-driven research that can help me out when I try to start my own company.

I hope you've enjoyed reading this long, dragged out post that's really just me ranting incoherently because I have sincerely enjoyed this class! In the end it has brought me one step closer to my end goals, given me a lot of wonderful information and experiences to draw on in the future and has really helped me improve drastically as a developer. Thank you for a wonderful term!

Until next term, cheers!

Cameron Nicoll

P.S. Managed to get this in 7 minutes before April 3rd, totally didn't make my closing statements from my last blog look like a lie!

Tuesday, 1 April 2014

Flowing Fluids

THE PENULTIMATE BLOG!

Yeah, I just needed to get that off my chest. I mean, how often do you get to use the word penultimate? So for this penultimate blog I was trying to think of unique and fun topics to discuss. One certain professor recommended Implicit Surfaces and I won't lie, they made very little sense to me. I have yet to develop the necessary skills for working through the usual jargon you find in academic papers. So instead I'm going to talk about another topic: Fluid Dynamics!

So I had looked into Fluid Dynamics before, namely because when you have your prof saying that you shouldn't do something from scratch that's either a warning or a challenge...I chose to see it as a challenge (which I, regrettably, could not meet). So I've only really looked at some of the basics of Fluid Dynamics and as such my description won't exactly be up to the level you would expect from something like, say, an academic paper. So to begin with, a simple way of looking at Fluid Dynamics is in terms of a grid. Each block on the grid is a particle that is a part of the fluid and contains information such as colour, direction and magnitude. As the simulation runs through its updates, we can use the information in each particle to figure out what each particle should look like (e.g. should the colour become more vivid because more force is accumulating, or is the particle untouched and so it should have no colour).

One way of sort of simplifying fluid dynamics is to think about it in terms of a scalar field (Field A) and a vector field (Field B). Field A is used to denote what colour each point in the simulation should be and then we can use bicubic interpolation between each one to figure out the colour on a per-pixel basis during the render process. This will result in a smoother colour blend for things such as smoke. Field B is then used purely for calculations and is ignored during the render step. In order to figure out how each particle changes we need some way of remembering the direction that the particle is travelling in as well as the force behind it in order to calculate the colour. This is important for things such as diminishing colour as the particle 'moves'.
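Here's a minimal sketch of that two-field setup, with a few assumptions flagged up front: the FluidGrid, SampleDensity and Step names are made up for illustration, I've used bilinear interpolation instead of bicubic to keep it short, and the update is a simple "look backwards along the velocity" step with a fade factor, which is one common way to move values through a grid and not necessarily the best one.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Hypothetical 2D vector type for Field B.
struct Vec2 { float x, y; };

struct FluidGrid
{
  int width;
  int height;
  std::vector<float> density;  // Field A: scalar, this is what gets rendered
  std::vector<Vec2> velocity;  // Field B: vector, only used during the update

  FluidGrid(int w, int h)
    : width(w), height(h),
      density(w * h, 0.0f),
      velocity(w * h, Vec2{ 0.0f, 0.0f }) {}

  int Index(int x, int y) const { return y * width + x; }
};

// Bilinear sample of Field A at a fractional grid position, clamped to the grid.
float SampleDensity(const FluidGrid& g, float x, float y)
{
  x = std::min(std::max(x, 0.0f), static_cast<float>(g.width - 1));
  y = std::min(std::max(y, 0.0f), static_cast<float>(g.height - 1));
  const int x0 = static_cast<int>(std::floor(x));
  const int y0 = static_cast<int>(std::floor(y));
  const int x1 = std::min(x0 + 1, g.width - 1);
  const int y1 = std::min(y0 + 1, g.height - 1);
  const float tx = x - static_cast<float>(x0);
  const float ty = y - static_cast<float>(y0);
  const float top = (1.0f - tx) * g.density[g.Index(x0, y0)] + tx * g.density[g.Index(x1, y0)];
  const float bottom = (1.0f - tx) * g.density[g.Index(x0, y1)] + tx * g.density[g.Index(x1, y1)];
  return (1.0f - ty) * top + ty * bottom;
}

// One update: every cell looks backwards along its velocity to find where its
// density came from, then fades it so the colour diminishes as it 'moves'.
void Step(FluidGrid& g, float dt, float fade)
{
  std::vector<float> next(g.density.size(), 0.0f);
  for (int y = 0; y < g.height; ++y)
  {
    for (int x = 0; x < g.width; ++x)
    {
      const Vec2 v = g.velocity[g.Index(x, y)];
      const float fromX = static_cast<float>(x) - dt * v.x;
      const float fromY = static_cast<float>(y) - dt * v.y;
      next[g.Index(x, y)] = fade * SampleDensity(g, fromX, fromY);
    }
  }
  g.density = next;
}
```

The same SampleDensity call is what the render step would use to get a smooth per-pixel value between grid cells. Field B never changes here; a fuller simulation would update the velocities too.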

This is more or less my understanding of the very basics of fluid dynamics. There is the option to use more equations to create more realistic dynamics, such as the Navier-Stokes equations, which take into account other factors such as pressure. Unfortunately, I will not be going into these at this point in time as I feel that I've still got quite a bit to learn in that area.

So yeah, that's more or less my understanding of Fluid Dynamics summed up in what seems to be one of my shorter blogs. I was kind of hoping that this one would come across as bigger and more interesting but I guess I didn't maintain the momentum from the last one. Oh well, they can't all be winners.

You can expect to see my final blog probably tomorrow/later today or tomorrow-tomorrow (as in April 3) but cheers for now!

Friday, 28 March 2014

Start Your Engines!

So I'm going to do my usual thing and work against the grain here by talking about something that's kind of related to our class but at the same time it's not something that we'd talk about at all really: Game Engines! This is sort of a field that's captured my interest and it can pertain to graphics as there are some engines specifically designed for rendering (e.g. OGRE) so I'm not entirely off the rails with this! Now, I get the feeling that this is going to be a rather long blog as I'm going to be starting off with just a run through of what Game Engines are and what they do, then some examples of engines and a little bit about their development cycle (Source and Crystal Tools) and finally some of my own plans *insert evil laugh here* so without further preamble...

Disclaimer: I'm still pretty ignorant when it comes to a lot of this stuff so don't expect grade-A, dead on explanations.

Okay so what is a Game Engine? It's pretty much all there in the title: It's the engine for the game, it's the powerhouse which can help get a game moving from the get go. In a way, it's pretty similar to a Framework (which is often used as a jumping off point for an engine), however it comes with a lot more functionality that is designed to streamline the development cycle. One of the major things for engines is that they usually come equipped with the basic necessities for games such as audio, collision and so on. The three basic types of game engines are all-in-one engines, framework-based engines (such as Source) and graphics engines. From what I've understood, the all-in-one engines are the ones sort of like Unity where you can make pretty much everything right off the bat without needing anything extra, while the framework-based ones usually end up spawning some tools that can be utilized with them (e.g. the Source Engine comes equipped with the Hammer Editor, which is a tool specifically for building levels that will work within the engine, as evidenced by the ever-increasing list of custom TF2 maps). Then graphics engines just specialize in the rendering process of games and can be used for pre-existing rendering techniques as well as managing scenes and what not. That's more or less a jumbled and quick breakdown of Engines.

So like I had mentioned earlier I'm going to talk a bit about two game engines: One I know a fair bit about and the other which I don't know nearly as much about. Let's start with the one that I do know: Valve's Source Engine. So when you purchase a Source game (such as Team Fortress 2, Half-Life, etc.) you get access to a few of the Source engine tools. These include things such as the Hammer Editor, a model viewer, as well as some tools for making mods and so on. The Source Engine is constantly being updated, and with it every game that is supported by it, as many owners of Valve games on Steam are aware when they get little updates for all their games that generally don't do anything to the game itself. This engine is in constant development, and because it's an in-house engine, Valve can constantly extend it with their own new tricks, such as when they introduced a new method of rendering water in Left 4 Dead 2! One key difference between the development of the Source Engine and the next engine I'm going to go into is that the Source Engine was initially built with a specific game in mind: Half-Life. This gave the team a clear goal when designing many of the features, as they knew that they would have to prepare it to be specialized for first-person shooters; a fact which has held true years later but has been expanded on with time.

So the next engine I'm going to talk about is one that I don't have a whole lot of info on and serves more as a warning for why you should have a single game in mind from the start: Square Enix's Crystal Tools Engine. So this engine has produced some pretty visually impressive games (the entire Final Fantasy XIII collection) and seems to work pretty well...Though, reading up on some stuff revealed that it had a somewhat shaky start. The engine was initially built with Final Fantasy XIII in mind as the title for it to support, but it quickly started taking on multiple projects within the company, each one demanding different specifications and causing the development team to lose track of what they needed to do. This would eventually result in them making it so that some games couldn't even include their assets! So I don't have much to say on this matter other than the fact that this does solidify the importance of having a game in mind from the start.

So that's my technical rambling that's probably missing the mark in a few places...If you want to verbally tear apart everything I've said and you happen to be Dr. Hogue or one of the more well-versed students at UOIT then feel free to tweet me and we can arrange a time to chat over coffee or something!

Now then, on to my last part: My own plans with this information!

...It's not that much of a secret, if you haven't figured it out by now then that's genuinely surprising. Obviously I plan to try and begin my own game engine this coming summer. I don't plan to use it for GDW but instead for my own purposes outside of school. I've begun working on a game idea for this engine to be based around but it's still very early in the pre-production stage, and with exams and Level-Up looming on the horizon I don't have much time to properly mull things over, so development is pretty much relegated to when I have spare brainpower. The way that things are looking to go, I'll have to put heavier emphasis on making smooth close-combat with irregular shapes, which will require creating a more elaborate collision system that works more along the lines of your standard shooter (where each limb has its own box and such) as well as accounting for different shapes. Ideally I'd want to make something similar to Unity where I can toggle between different types of collision but simultaneously be able to add a lot of depth to it. Another heavy emphasis will be on real-time cutscene rendering, so I'd like to have something set up that would allow me to easily create a camera track with speed control. I'd also want to put a lot of work into designing a good base for AI, as I'll need support for friendly combat AI.
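To make the "each limb has its own box" idea a bit more concrete, here's a minimal sketch using axis-aligned bounding boxes. Everything here (Vec3, AABB, Overlaps, FirstLimbHit) is a hypothetical name for illustration and none of it is existing engine code; real limb boxes would usually be oriented and follow the skeleton, which this skips.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical minimal types, just for this sketch.
struct Vec3 { float x, y, z; };

// Axis-aligned bounding box described by its lowest and highest corners.
struct AABB { Vec3 lo; Vec3 hi; };

// Two AABBs overlap only if their ranges overlap on all three axes.
bool Overlaps(const AABB& a, const AABB& b)
{
  return a.lo.x <= b.hi.x && a.hi.x >= b.lo.x &&
         a.lo.y <= b.hi.y && a.hi.y >= b.lo.y &&
         a.lo.z <= b.hi.z && a.hi.z >= b.lo.z;
}

// Returns the index of the first limb box touched by the attack box,
// or -1 if the attack misses every limb.
int FirstLimbHit(const std::vector<AABB>& limbs, const AABB& attack)
{
  for (std::size_t i = 0; i < limbs.size(); ++i)
  {
    if (Overlaps(limbs[i], attack))
    {
      return static_cast<int>(i);
    }
  }
  return -1;
}
```

Knowing which limb was hit (rather than just "the character was hit") is what lets close-combat react differently to, say, an arm versus the torso. Swapping Overlaps for a different shape test would be one way to get the toggle between collision types mentioned above.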

I apologize for the large chunk of text above, as this was more me trying to plan it out for myself, and I find I develop my ideas better by trying to explain them to others. I'd rather not edit the above area or delete it as I think it does explain a bit more about me as a person...But to summarize it:

Things I will need to emphasize

-A larger variety of collision with varying depths of complexity

-A method of better controlling cutscenes

-A strong AI-base

I also plan on making my engine a framework-style engine so that I can produce some tools to better work with it. Ideally I'd want to create the following:

-A model viewer/render tester: So that I can experiment with different shaders while making sure they run in-game and produce the intended results

-A custom level editor: Ideally one that can take in OBJs and allow me to set up different types of triggers and such

-A cutscene creator: Something similar to the level editor except that I could create a track for the camera to follow and control its speed as it progresses. I also would like to make it so that I could export custom animations for the scene as well as load in level layouts so that I could experiment with moving characters around and seeing how the scene plays out before exporting it as a file type that's easy for the engine to read in at the necessary time.

I recognize that a few of these are probably very unrealistic given my current skill level and I'll probably have a lot of optimization errors in my first pass. I also know that it won't even be close to being a quarter finished by the end of the summer but that doesn't really matter in the end. At the end of the day, even if it doesn't work out I'll still hopefully have learned something or driven myself so crazy that it won't matter anymore!

On that note I'm going to wrap up this post. Now I just need to think of another topic to write about as I already have my final post planned out to some degree. Cheers for now!

Side-note: I am well aware that we take Game Engines in 3rd year, I'll be picking up the copy of my textbook from home after Level-Up. I also know I could have just waited before starting something like this, but as Dan Buckstein can attest: I very rarely wait until it gets taught in class.

So like I had mentioned earlier I'm going to talk a bit about two game engines: One I know a fair bit about and the other which I don't know nearly as much about. Let's start with the one that I do know: Valve's Source Engine. So when you purchase a Source game (such as Team Fortress 2, Half-Life, etc.) you get access to a few of the Source engine tools. These include things such as the Hammer Editor, a model viewer, as well as some tools for making mods and so on. The Source Engine is constantly being updated and with it every game that it supports, as many owners of Valve games on Steam are aware when they get little updates for all their games that generally don't do anything to the game itself. Because it's an in-house engine, Valve can constantly extend it with their own new tricks, such as when they introduced a new method of rendering water in Left 4 Dead 2! One key difference between the development of the Source Engine and the next engine I'm going to go into is that the Source Engine was initially built with a specific game in mind: Half-Life 2. This gave the team a clear goal when designing many of the features as they knew that they would have to specialize it for first-person shooters; a fact which has held true a decade later but has been expanded on with time.

So the next engine I'm going to talk about is one that I don't have a whole lot of info on and serves more as a warning for why you should have a single game in mind from the start: Square Enix's Crystal Tools Engine. So this engine has produced some pretty visually impressive games (the entire Final Fantasy XIII collection) and seems to work pretty well...Though, reading up on some stuff revealed that it had a somewhat shaky start. The engine was initially built with Final Fantasy XIII in mind as the title for it to support but it quickly started taking on multiple projects within the company, each one demanding different specifications and causing the development team to lose track of what they needed to do. This would eventually result in them making it so that some games couldn't even include their assets! So I don't have much to say on this matter other than the fact that this does solidify the importance of having a game in mind from the start.

So that's my technical rambling that's probably missing the mark in a few places...If you want to verbally tear apart everything I've said and you happen to be Dr. Hogue or one of the more well-versed students at UOIT then feel free to tweet me and we can arrange a time to chat over coffee or something!

Now then, on to my last part: My own plans with this information!

...It's not that much of a secret, if you haven't figured it out now then that's genuinely surprising. Obviously I plan to try and begin my own game engine this coming summer. I don't plan to use it for GDW but instead for my own purposes outside of school. I've begun working on a game idea for this engine to be based around but it's still very early in the pre-production stage and with exams and Level-Up looming on the horizon I don't have much time to properly mull things over, so development is pretty much relegated to when I have spare brainpower. The way that things are looking to go, I'll have to put heavier emphasis on making smooth close-combat with irregular shapes which will require creating a more elaborate collision system that works more along the lines of your standard shooter (where each limb has its own box and such) as well as accounting for different shapes. Ideally I'd want to make something similar to Unity where I can toggle between different types of collision but simultaneously be able to add a lot of depth to it. Another heavy emphasis will be on real-time cutscene rendering so I'd like to have something set up that would allow me to easily create a camera track with speed control. I'd also want to put a lot of work into designing a good base for AI as I'll need support for friendly combat AI.

I apologize for the large chunk of text above as this was more me trying to plan it out for myself, and I find I develop my ideas better by trying to explain them to others. I'd rather not edit the above area or delete it as I think it does explain a bit more about me as a person...But to summarize it:

Things I will need to emphasize

-A larger variety of collision with varying depths of complexity

-A method of better controlling cutscenes

-A strong AI-base

I also plan on making my engine a framework-style engine so that I can produce some tools to better work with it. Ideally I'd want to create the following:

-A model viewer/render tester: So that I can experiment with different shaders while making sure they run in-game and produce the intended results

-A custom level editor: Ideally one that can take in OBJs and allow me to set up different types of triggers and such

-A cutscene creator: Something similar to the level editor except that I could create a track for the camera to follow and control its speed as it progresses. I also would like to make it so that I could export custom animations for the scene as well as load in level layouts so that I could experiment with moving characters around and seeing how the scene plays out before exporting it as a file type that's easy for the engine to read in at the necessary time.

I recognize that a few of these are probably very unrealistic given my current skill level and I'll probably have a lot of optimization errors in my first pass. I also know that it won't even be close to being a quarter finished by the end of the summer but that doesn't really matter in the end. At the end of the day, even if it doesn't work out I'll still hopefully have learned something or driven myself so crazy that it won't matter anymore!

On that note I'm going to wrap up this post. Now I just need to think of another topic to write about as I already have my final post planned out to some degree. Cheers for now!

Side-note: I am well aware that we take Game Engines in 3rd year, I'll be picking up the copy of my textbook from home after Level-Up. I also know I could have just waited before starting something like this, but as Dan Buckstein can attest: I very rarely wait until it gets taught in class.

Saturday, 22 March 2014

Ramble Ramble Ramble

So last time on this blog: I had mentioned either talking about mo-cap data or portals and did a lot of ranting on AI! This time: I'm going to talk incoherently about different stuff and doing the Portals for Portal will randomly be explained somewhere in the middle. So if I'm going to ramble then what's the point of this blog post, you ask? Simple! I'm going to take this chance to talk about a lot of small things which I don't think I could use for an entire blog post or which would mean retreading ground I've already covered. So without further ado...

Reflections!

What do I mean by reflections? I mean making something like a mirror that renders in real time. Doing this is a pretty simple 2-part process so I'll just walk through it with some explanations along the way.

Part 1 - Render the scene from the point of view of the mirror. To do this, first get the vector from the camera to the mirror in question; this gives you your viewing direction, which you then reflect about the normal vector of the mirror to get a reflected viewing direction. The actual equation you would want to use here is: V - 2 * (V dot N) * N, where V is the viewing direction and N is the mirror's unit-length normal. This vector is the direction that your mirror camera is looking in. Once you have it, you simply calculate the Up vector and you can generate a View Matrix for your mirror. Use this view matrix to render the entire scene once, storing it into a Framebuffer Object. Now that you have the scene the mirror sees, move on to the next step!

Part 2 - Now that we have the scene that the mirror sees, we render the scene like we do normally and apply the FBO texture from the previous pass to the mirror: It will now display the reflection in real time and it will change based on the position of the player's camera.
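For anyone who wants to see the reflection step from Part 1 written out, here's a minimal sketch in plain C++. The Vec3 struct and the Dot and Reflect helpers are stand-ins I made up for illustration (they are not 2LoC types), and the formula assumes the mirror normal is already normalized:

```cpp
#include <cassert>
#include <cmath>

// Minimal 3-component vector; a stand-in for whatever math type your engine provides.
struct Vec3 { float x, y, z; };

float Dot(const Vec3& a, const Vec3& b)
{
  return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Reflects the viewing direction v about the mirror normal n:
// R = V - 2 * (V dot N) * N
Vec3 Reflect(const Vec3& v, const Vec3& n)
{
  // The formula only holds for a unit-length normal.
  assert(std::fabs(Dot(n, n) - 1.0f) < 1e-4f);

  const float d = 2.0f * Dot(v, n);
  return Vec3{ v.x - d * n.x, v.y - d * n.y, v.z - d * n.z };
}
```

So a view direction of (1, -1, 0) hitting a floor mirror with normal (0, 1, 0) bounces off as (1, 1, 0). From there you would feed the reflected direction into whatever look-at function your math library has to build the mirror's View Matrix.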

Now that we have reflections working, we can apply some fun stuff to it. For example, we could make a rippling surface as if it's on a lake. To do this we have multiple options: We could use a tessellation shader and use a ripple texture as a displacement map; We could use an algorithm to change how we sample the image, making it distort in places to simulate a ripple appearance; We could also use surface normals to change how the light reflects off of it to make it appear like there are troughs and crests to fool the player into thinking it's rippling.

Portals!

So the portals that we did in class are pretty similar to how you would do a reflection: You're just rendering the scene from a different point of view. In this case you're taking the direction vector between the viewer's position and the position of the portal they're looking through and rotating it so that it matches the orientation of the portal they're looking out of. Then you render the scene from the position of the out-Portal with the direction you calculated previously to a FBO and apply that FBO's texture to the in-Portal.
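Here's a rough sketch of one way to do that rotation, not necessarily how we did it in class. The PortalFrame struct and ThroughPortal function are names I'm making up for this example. I'm assuming each portal is described by a unit normal pointing out of the wall and a unit up vector, and that going through a portal amounts to a half-turn about its up axis (so a viewer looking straight into one portal ends up looking straight out of the other):

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

float Dot(const Vec3& a, const Vec3& b)
{
  return a.x * b.x + a.y * b.y + a.z * b.z;
}

Vec3 Cross(const Vec3& a, const Vec3& b)
{
  return Vec3{ a.y * b.z - a.z * b.y,
               a.z * b.x - a.x * b.z,
               a.x * b.y - a.y * b.x };
}

// Hypothetical portal description: both vectors are assumed unit length
// and perpendicular to each other. The normal points out of the wall.
struct PortalFrame { Vec3 normal; Vec3 up; };

// Re-expresses a view direction given at the in-portal as a direction
// leaving the out-portal.
Vec3 ThroughPortal(const Vec3& dir, const PortalFrame& in, const PortalFrame& out)
{
  assert(std::fabs(Dot(in.normal, in.normal) - 1.0f) < 1e-4f);
  assert(std::fabs(Dot(out.normal, out.normal) - 1.0f) < 1e-4f);

  const Vec3 rightIn  = Cross(in.up, in.normal);
  const Vec3 rightOut = Cross(out.up, out.normal);

  // Components of the view direction in the in-portal's local frame.
  const float a = Dot(dir, rightIn);
  const float b = Dot(dir, in.up);
  const float c = Dot(dir, in.normal);

  // Half-turn about up: right and normal flip sign, up is unchanged.
  return Vec3{ -a * rightOut.x + b * out.up.x - c * out.normal.x,
               -a * rightOut.y + b * out.up.y - c * out.normal.y,
               -a * rightOut.z + b * out.up.z - c * out.normal.z };
}
```

The returned direction is what you would use for the out-Portal camera when rendering to the FBO.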

For added effects such as the rings they have in Portal, make a texture set up so that you have a pass-through colour (i.e. just set the alphas to be 0) and a discard colour (i.e. black, blue, etc.) and when you go to render the portal, send in the FBO texture as well as the portal texture. While sampling, if the value sampled from the portal texture is the discard colour then nothing is drawn, if it's the pass-through colour the FBO is rendered, and anything else is blended based on alphas. This results in the fragment shader cropping away any unwanted pieces so that the portal blends in smoothly with anything behind it such as the walls.
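To make the per-fragment decision a bit more concrete, here's a CPU-side sketch of the logic in C++ (in practice this would live in the fragment shader). Rgba, SameColour and ShadePortalFragment are hypothetical names, and I'm assuming the discard colour is an opaque key colour so it can't be confused with the alpha-0 pass-through texels:

```cpp
#include <cassert>
#include <cmath>

struct Rgba { float r, g, b, a; };

// True when two colours match on all four channels within a small tolerance.
bool SameColour(const Rgba& p, const Rgba& q)
{
  const float eps = 1e-3f;
  return std::fabs(p.r - q.r) < eps && std::fabs(p.g - q.g) < eps &&
         std::fabs(p.b - q.b) < eps && std::fabs(p.a - q.a) < eps;
}

// ring:       sample from the portal (ring) texture
// fbo:        sample from the FBO texture rendered from the out-Portal
// discardKey: the colour that means "draw nothing here"
// Returns false when the fragment should be discarded; otherwise writes the
// final colour to result.
bool ShadePortalFragment(const Rgba& ring, const Rgba& fbo,
                         const Rgba& discardKey, Rgba& result)
{
  assert(ring.a >= 0.0f && ring.a <= 1.0f);

  if (SameColour(ring, discardKey))
    return false; // equivalent to discard in a fragment shader

  // Alpha 0 shows the FBO untouched, alpha 1 shows the ring texture,
  // and anything in between is a linear blend of the two.
  const float t = ring.a;
  result = Rgba{ fbo.r + (ring.r - fbo.r) * t,
                 fbo.g + (ring.g - fbo.g) * t,
                 fbo.b + (ring.b - fbo.b) * t,
                 1.0f };
  return true;
}
```

The nice part is that the pass-through case doesn't need its own branch: a texel with alpha 0 just falls out of the blend as pure FBO colour.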

Uniquely Shaped Meters!

So this is something I found interesting, it's a technique for creating functioning meters (i.e. player health) that have unique shapes such as being circular or just a wavy line. Basically what happens is you have two textures which are being sampled from: The actual, rendered texture and a 'cutoff' texture. The cutoff texture matches up with the rendered texture and is just a smooth gradient from 1.0 to 0.0 in the direction you want the meter to go. When you render the meter you would pass in a normalized value to use as your cutoff value.

[Image: Cutoff Texture]

So say you have a cutoff texture that just goes from black to white in a smooth gradient and it's rendering the player health, the player currently has 750/1250HP. This means that the player is currently at 60% health and so you would pass in a value of 0.6. Now, when it goes to check if it should render the health meter, it will sample from the cutoff texture and if the value is 0.6 or less, it will then proceed to sample from the render texture and output the appropriate colour. If the value is greater, it will simply not render the fragment. I like this technique a lot because it just gives you a lot of versatility in how you might want to render things such as timers or health bars or ammo...It just gives you and your artists a lot of flexibility!
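The check itself is tiny. Here's a sketch of it in C++ standing in for the shader code; NormalizedFill and ShouldDrawMeterFragment are names I picked for this example, and the texture sampling is assumed to have already happened:

```cpp
#include <cassert>

// Converts a current/maximum pair (e.g. 750 / 1250 HP) into the
// normalized cutoff value passed to the meter, clamped to [0, 1].
float NormalizedFill(float current, float maximum)
{
  assert(maximum > 0.0f);
  float fill = current / maximum;
  if (fill < 0.0f) fill = 0.0f;
  if (fill > 1.0f) fill = 1.0f;
  return fill;
}

// cutoffSample is the value read from the cutoff texture at this fragment.
// The fragment is drawn only if the gradient value is at or below the fill;
// otherwise the shader would discard it.
bool ShouldDrawMeterFragment(float cutoffSample, float fill)
{
  return cutoffSample <= fill;
}
```

With the 750/1250HP example, NormalizedFill gives 0.6, so a fragment where the cutoff texture reads 0.25 gets drawn while one that reads 0.9 does not.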

Dual Quaternions!

Yeah...No...Not this time. They warrant a full blog post.

So that's my rambling for the night, I hope you enjoyed watching me try to blunder my way through how to do simple graphics stuff that I find kind of interesting and fun...

I actually don't have anything witty to say here so I'm just going to put a picture of Phong just to make this blog look longer than it is. Cheers!

Saturday, 15 March 2014

AI...What's the A stand for?

So I'm starting to run out of topics that interest me for Graphics (and which haven't been covered pretty well in class) so I'm going to take a bit of a break and talk about a programming topic near and dear to my heart: AI!

So last term I talked to Dan Buckstein about some AI stuff and he put me down an interesting path, one filled with weirdly named terms like Fuzzy Logic, and I've gotten the chance to apply a few of the fun concepts that I picked up from him. My absolute favourite is Finite State Machines, which are basically anything that can have multiple states which the system will transition between once certain conditions are met. The most basic example of a FSM is a light which switches between on and off when the light switch is put into different positions. Of course, they can get a lot more complex than that (for example, games are FSMs themselves) but for this blog I'll be talking strictly in terms of how they can be applied for AI.
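Just to show how small a FSM can be, here's the light switch example as a quick C++ sketch. The enum and function names are made up for illustration:

```cpp
#include <cassert>

enum class LightState { Off, On };
enum class SwitchPosition { Down, Up };

// Returns the next state of the light given its current state and the
// position the switch was just put into. Each case lists the one condition
// that causes a transition; any other input leaves the state unchanged.
LightState Transition(LightState current, SwitchPosition input)
{
  switch (current)
  {
    case LightState::Off:
      return input == SwitchPosition::Up ? LightState::On : LightState::Off;
    case LightState::On:
      return input == SwitchPosition::Down ? LightState::Off : LightState::On;
  }
  assert(false && "unhandled LightState");
  return current;
}
```

Two states and one transition condition per state, and that's the whole machine. AI states work the same way, just with more states and more interesting conditions.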

For our enemies in our GDW game, I use a combination of polymorphism and states allowing me to reduce a large portion of the update function for each enemy to just one simple line:

currentState->Execute(dt, this);